Geer Miao, Analyst

In our previous article, we introduced ESGPT, a generative pre-trained Transformer architecture specifically designed for modeling complex event sequences in continuous time. We outlined its foundational concepts and discussed its relevance to the cryptocurrency domain. In this article, we further investigate ESGPT’s capabilities in processing large-scale, sparsely distributed community-generated data for the purpose of forecasting market volatility.

In cryptocurrency market analysis, raw data sources—such as social media text and on-chain transaction records—are often noisy, incomplete, and sometimes even misleading. Data preprocessing serves as the “gold panning” stage of the pipeline: sifting through the informational debris to extract genuine market signals. It ensures that models learn real patterns rather than noise or spurious correlations. Without effective preprocessing, even the most advanced model will fall victim to the classic principle: Garbage In, Garbage Out (GIGO).

Given the inherently high-noise nature of crypto markets, precise data cleaning is a critical prerequisite for accurate forecasting. Before ESGPT can make reliable predictions, the preprocessing pipeline (data cleaning, temporal alignment, and feature engineering) transforms fragmented community signals into a unified, highly optimized input template. This process enables ESGPT to train and infer more efficiently than conventional models that work from unaligned, per-source inputs.

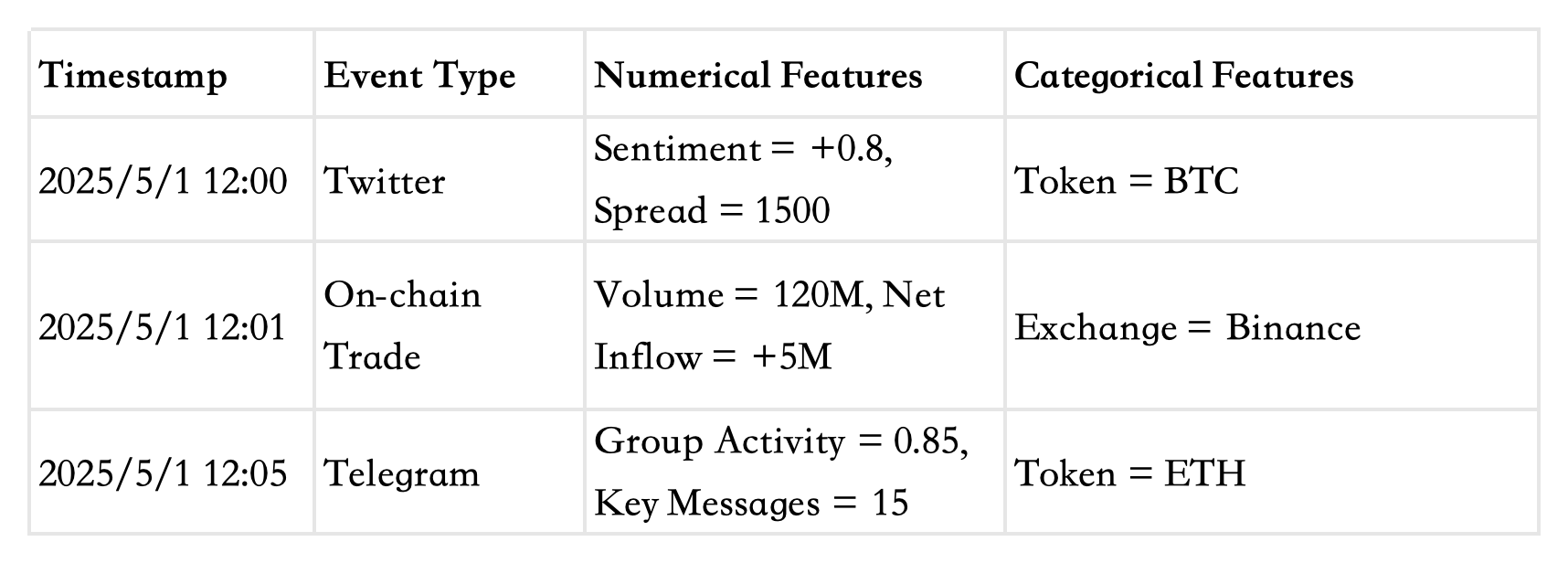

One of ESGPT’s key strengths lies in its ability to convert sparse, scattered data across multiple communities into a structured event stream, allowing the model to capture market signals with precision. ESGPT can handle diverse data modalities simultaneously, ranging from textual inputs (e.g., sentiment from tweets) to numerical features (e.g., trading volume, capital inflows) to categorical variables (e.g., token labels, event types). Through a built-in hierarchical embedding mechanism, it automatically transforms these heterogeneous inputs into a unified vector representation, which greatly reduces the need for manual feature engineering.

What the model ultimately "sees" is a sequence of time-stamped events. Below is a sample of the unified input structure (illustrative only, not actual data):
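As a rough sketch of what such a time-stamped structure might look like, the Python snippet below defines a single event record and a tiny stream. The class name `Event`, every field name, the source labels, and all values are hypothetical placeholders chosen for illustration; they are not ESGPT's actual schema and not real market data.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Event:
    """One time-stamped event in a unified stream (hypothetical schema)."""
    timestamp: datetime                     # timezone-aware, stored in UTC
    source: str                             # categorical: originating platform
    event_type: str                         # categorical: kind of event
    token: str                              # categorical: token label
    text_sentiment: Optional[float] = None  # textual signal reduced to a score
    volume: Optional[float] = None          # numerical: trading volume
    net_inflow: Optional[float] = None      # numerical: capital inflow


def utc(hour: int, minute: int) -> datetime:
    """Helper for building placeholder timestamps on one arbitrary day."""
    return datetime(2024, 1, 1, hour, minute, tzinfo=timezone.utc)


# Placeholder events from three sources, deliberately listed out of order.
raw_events = [
    Event(utc(13, 5), "exchange", "net_inflow", "TOKEN_A", net_inflow=1250.0),
    Event(utc(13, 0), "twitter", "post", "TOKEN_A", text_sentiment=0.6),
    Event(utc(13, 2), "telegram", "message", "TOKEN_A", text_sentiment=0.4),
]

# The model consumes events in time order, whatever platform they came from.
stream = sorted(raw_events, key=lambda e: e.timestamp)
```

Fields that do not apply to a given event are left as `None`, which is one simple way to keep sparse, multi-source data in a single record type.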

After data normalization, we obtain a clean, standardized, and time-aligned event stream, enabling ESGPT to more accurately capture correlations between community activity and market fluctuations—ultimately supporting more reliable predictions.
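The cleaning, temporal alignment, and normalization steps can be sketched in a few lines of standard-library Python. This is a minimal illustration under stated assumptions, not ESGPT's actual pipeline: records are assumed to be `(timestamp, source, value)` tuples, duplicates are assumed to be exact repeats, and normalization is assumed to be a plain z-score. The function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, pstdev


def to_utc(ts: datetime) -> datetime:
    """Convert an aware timestamp to UTC; treat naive timestamps as UTC (assumption)."""
    if ts.tzinfo is None:
        return ts.replace(tzinfo=timezone.utc)
    return ts.astimezone(timezone.utc)


def clean_and_align(records):
    """Drop exact duplicates, convert timestamps to UTC, and sort by time."""
    seen = set()
    cleaned = []
    for ts, source, value in records:
        key = (to_utc(ts), source, value)
        if key in seen:
            continue  # exact repeat of an earlier record
        seen.add(key)
        cleaned.append(key)
    return sorted(cleaned, key=lambda record: record[0])


def zscore(values):
    """Standardize values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Placeholder records: one in UTC+8, one in UTC, and one exact duplicate.
utc_plus_8 = timezone(timedelta(hours=8))
raw = [
    (datetime(2024, 1, 1, 21, 0, tzinfo=utc_plus_8), "telegram", 3.0),
    (datetime(2024, 1, 1, 12, 30, tzinfo=timezone.utc), "twitter", 1.0),
    (datetime(2024, 1, 1, 12, 30, tzinfo=timezone.utc), "twitter", 1.0),
]

aligned = clean_and_align(raw)
scaled = zscore([value for _, _, value in aligned])
```

After alignment the Telegram record, posted at 21:00 in UTC+8, sits at 13:00 UTC behind the 12:30 tweet, and the duplicate tweet is gone.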

When it comes to forecasting, ESGPT generates future event sequences autoregressively. Its predictive power stems not only from advanced data modeling but also from its capacity to incorporate explicit causal logic chains. Its Transformer-based architecture autonomously uncovers latent patterns within the market. By analyzing massive volumes of historical data, ESGPT learns complex associations such as:

- “When the top three whale groups on Telegram discuss a specific token, there is a 78% probability of a price increase within two hours.”
- “When there is a divergence between exchange net inflows and social media sentiment, there is an 80% likelihood of a trend reversal.”
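Statements of this form are conditional probabilities, and the simplest way to sanity-check one is to count how often the outcome followed the signal in historical data. The sketch below does exactly that with a plain frequency count. It is not how ESGPT learns such associations (the article attributes that to the Transformer itself); the function names, the input layout, and the toy prices are all assumptions made for illustration.

```python
from bisect import bisect_right
from datetime import datetime, timedelta, timezone


def price_at(prices, ts):
    """Last observed price at or before ts; prices is a time-sorted list of (time, price)."""
    times = [t for t, _ in prices]
    i = bisect_right(times, ts)
    return prices[i - 1][1] if i > 0 else None


def hit_rate(signal_times, prices, horizon):
    """Fraction of signals followed by a higher price once the horizon has elapsed."""
    hits = 0
    total = 0
    last_time = prices[-1][0]
    for ts in signal_times:
        if ts + horizon > last_time:
            continue  # outcome not observable yet, so skip this signal
        before = price_at(prices, ts)
        after = price_at(prices, ts + horizon)
        if before is None or after is None:
            continue
        total += 1
        hits += after > before
    return hits / total if total else None


# Toy hourly prices (placeholder values, not market data).
t0 = datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc)
prices = [(t0 + timedelta(hours=h), p)
          for h, p in enumerate([100.0, 101.0, 103.0, 102.0, 99.0, 98.0])]

# Three signals; the last one is too recent to evaluate over a two-hour horizon.
signals = [t0, t0 + timedelta(hours=2), t0 + timedelta(hours=4)]
rate = hit_rate(signals, prices, timedelta(hours=2))
```

Here the first signal is followed by a rise (100 to 103), the second by a fall (103 to 99), and the third is skipped, so the estimated rate is 0.5 from two usable signals. A real estimate would need far more observations before a figure like 78% meant anything.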

The more patterns ESGPT captures, the deeper its understanding of token behavior and market structure becomes. It continuously iterates on its logic, refining its predictive accuracy over time.

As in its applications in the medical domain, where domain knowledge is encoded as structured causal rules, ESGPT can also ingest trader-defined logic about cryptocurrency markets. This fusion of machine learning with expert insight forms a dual-engine system:

- During cold starts, manually encoded logic provides a structural foundation, avoiding random predictions when data is sparse.
- During normal operation, data-driven learning dominates, while manual rules act as validators to filter out anomalies.
- In extreme market conditions, circuit-breaker rules take precedence to prevent misjudgments caused by abnormal data.

This hybrid framework combines adaptive pattern recognition with the seasoned judgment of human traders, allowing ESGPT to remain both responsive and robust across dynamic market regimes.
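The three operating modes described above can be read as a small arbitration function. The sketch below is one possible interpretation, not ESGPT's implementation: the thresholds `min_observations` and `max_disagreement`, the `Decision` type, and the choice to withhold a prediction when the circuit breaker trips or the rules reject the model are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    value: Optional[float]  # predicted probability, or None if withheld
    source: str             # which engine produced (or blocked) the prediction


def combine(model_pred: float, rule_pred: float, n_observations: int,
            extreme_market: bool, *, min_observations: int = 1000,
            max_disagreement: float = 0.3) -> Decision:
    """Arbitrate between a learned prediction and a rule-based one (hypothetical)."""
    # Extreme conditions: circuit-breaker rules take precedence over everything.
    if extreme_market:
        return Decision(None, "circuit_breaker")
    # Cold start: too little data to trust the model, so fall back to rules.
    if n_observations < min_observations:
        return Decision(rule_pred, "rules")
    # Normal operation: the model leads, and rules veto implausible outputs.
    if abs(model_pred - rule_pred) > max_disagreement:
        return Decision(None, "rejected_by_rules")
    return Decision(model_pred, "model")


cold_start = combine(0.70, 0.60, n_observations=10, extreme_market=False)
normal = combine(0.70, 0.60, n_observations=5000, extreme_market=False)
anomaly = combine(0.95, 0.20, n_observations=5000, extreme_market=False)
halted = combine(0.70, 0.60, n_observations=5000, extreme_market=True)
```

Checking the circuit breaker first makes the precedence explicit: no amount of data or model confidence can override it.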

Crucially, ESGPT’s predictive power goes far beyond simple directional forecasting. Its core value lies in quantifying volatility with precision. By modeling the multi-platform event stream as an integrated temporal system, ESGPT can estimate the probability and intensity of volatility spikes (e.g., “There is a 78% chance of >30% volatility within the next 2 hours”) and identify key time windows (e.g., “Optimal trading period: 13:00–14:30 UTC”).
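One standard way to turn an event intensity into a statement like "X% chance within the next 2 hours" is to treat spikes as a Poisson process, for which the probability of at least one event in a window is one minus the exponential of the negative integrated intensity. The sketch below applies that formula to a piecewise-constant intensity and also finds the highest-intensity window. The Poisson assumption, the function names, and the intensity values are illustrative; the article does not specify how ESGPT computes these quantities.

```python
import math


def spike_probability(intensities, step_hours):
    """P(at least one spike), assuming a Poisson process with piecewise-constant
    intensity (events per hour) over equal-length time steps."""
    integrated = sum(lam * step_hours for lam in intensities)
    return 1.0 - math.exp(-integrated)


def peak_window(intensities, window_steps):
    """Start index of the contiguous window with the highest total intensity."""
    best_start = 0
    best_total = float("-inf")
    for start in range(len(intensities) - window_steps + 1):
        total = sum(intensities[start:start + window_steps])
        if total > best_total:
            best_start, best_total = start, total
    return best_start


# Hypothetical forecast intensities (spikes per hour) in six half-hour bins.
forecast = [0.1, 0.2, 0.9, 1.1, 0.3, 0.1]

p_spike = spike_probability(forecast, step_hours=0.5)  # over the full 3 hours
start_bin = peak_window(forecast, window_steps=2)      # best one-hour window
```

With these placeholder numbers the integrated intensity is 1.35, and the busiest one-hour window starts at the third bin (index 2).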

This fine-grained understanding of market “heartbeat rhythms” lets traders position themselves proactively: buying options during low-volatility periods, deploying straddles around predicted volatility bursts, and even applying gamma hedging to build strategies whose returns depend on the size of a price move rather than on its direction.
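To make the straddle idea concrete, the sketch below computes the profit or loss at expiry of a long straddle (one call plus one put at the same strike). The formula is the standard textbook payoff; the strike, premiums, and prices are made-up numbers, and fees, early exercise, and changes in implied volatility are ignored.

```python
def long_straddle_pnl(spot_at_expiry: float, strike: float,
                      call_premium: float, put_premium: float) -> float:
    """Profit or loss at expiry for one long call plus one long put at the same strike."""
    call_payoff = max(spot_at_expiry - strike, 0.0)
    put_payoff = max(strike - spot_at_expiry, 0.0)
    return call_payoff + put_payoff - (call_premium + put_premium)


# Hypothetical position: strike 100, call premium 4, put premium 3.
pnl_up = long_straddle_pnl(130.0, 100.0, 4.0, 3.0)    # 30% move up
pnl_down = long_straddle_pnl(70.0, 100.0, 4.0, 3.0)   # 30% move down
pnl_flat = long_straddle_pnl(100.0, 100.0, 4.0, 3.0)  # no move
```

A 30% move in either direction yields the same gain of 23, while an unchanged price loses the full premium of 7. The position profits only when the move exceeds the total premium paid, which is why the timing and magnitude of the volatility forecast matter more than its direction.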

Compared to traditional technical analysis, which often offers vague directional signals, ESGPT’s three-dimensional volatility framework—spanning probability, magnitude, and timing—transforms seemingly random price action into actionable statistical expectations. It becomes a decisive edge in the trader’s toolkit.
