Introduction

The number of crypto assets on the market is far larger than anyone can analyze and investigate. Information on token design, use cases, and actual economic value to the holder is fragmented: each case requires additional research, digging into documentation and contracts, and sometimes searching the web for blog posts. All major analytics platforms provide only market metrics and general project descriptions, without explicitly focusing on token features and the value behind them.

Valueverse aims to solve the information fragmentation problem by introducing Token Health Metrics to understand token designs and their value. It is a "non-market metrics" view of tokens built on top of tokens' value-capturing features and on-chain data of their practical usage.

We are starting this work with the official Superchain grant provided during the Gitcoin Grants 21 round.

The Current State of Token Evaluation

Currently, tokens are predominantly assessed based on generic market indicators (price, trading volume, market capitalization, etc.). In reality, these metrics mostly reflect market sentiment rather than serving as value-investing signals:

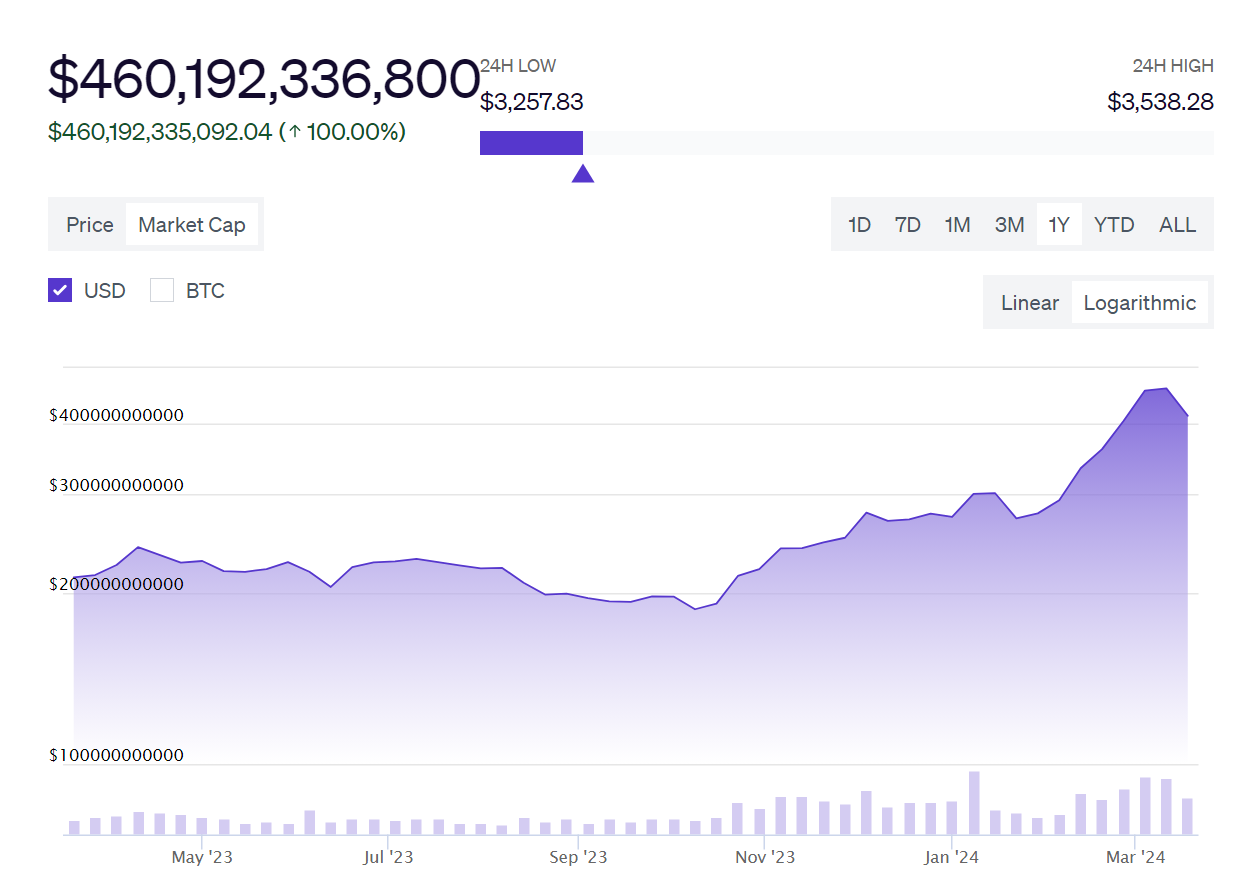

Figure 1

CRV v. PEPE: Comparative Market Capitalization Analysis, 2023-2024

Furthermore, traditional valuation techniques borrowed from the TradFi world are insufficient for evaluating the multi-dimensional pricing and intrinsic value of blockchain projects. Many of the most commonly cited metrics, such as token price or market capitalization, provide almost no information about a token's health or its ability to capture value from the underlying protocol. In addition, these surface-level indicators do not reflect underlying technological progress, network effects, or ecosystem building blocks, all of which contribute to long-run valuations.

The New Value-Focused Approach

The proposed approach goes beyond superficial market indicators and focuses on examining the fundamental value drivers within token ecosystems. It considers factors such as tokens' utility functions and ability to capture value, supported by metrics calculated from the on-chain data.

We propose a paradigm shift in understanding tokens’ value based on Vasily Sumanov's Value Capturing Theory principles.

By decomposing token utility into particular Value Capturing Mechanisms (VCMs) and Origins of Value (OoVs), this new framework aims to provide a holistic view of the actual value of a token's ecosystem. It uses non-market metrics to complement market metrics, exploring how value is created and captured in blockchain projects.

With this new approach, our primary goal is to significantly improve our ability to evaluate currently available digital assets and to guide the design of more sustainable token ecosystems in the future.

Understanding Health Metrics for Token Ecosystems

Harnessing this approach fully requires a shift in focus toward health metrics and a clear understanding of what a token ecosystem is.

Defining Health Metrics

Token Ecosystem Health Metrics quantify the state of a token ecosystem by measuring a given protocol's core components, such as governance, incentives, token flows, and sustainability.

Where traditional metrics deal with market performance, health metrics mirror the inner dynamics of token ecosystems, which are invisible when looking at price alone. Health metrics are meant to supply the missing piece of the puzzle by emphasizing on-chain data and real-world utility. They provide information on user activity, network effects, and whether or not the token serves its intended purpose.

The Importance of Utility-Centric Metrics

This approach shifts attention from "How much is this token worth?" to "What can this token do, and how well is it doing it?" By tracking token usage, we gain a crucial understanding of the demand and use cases for tokens in practice. Utility metrics can be applied in many ways depending on the ecosystem and the purpose of the token. For a governance token, for example, we might look at the rate of active voters in protocol governance, but also at the value of what is being governed (see Figure 2) and the distribution of voting power. Together, these metrics provide a more fine-grained view of the token's utility in enabling decentralized decision-making.

Figure 2

MakerDAO Treasury: Asset Distribution and Holdings Composition
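As a rough illustration of the governance metrics mentioned above, the sketch below computes an active voter rate and one simple view of voting power distribution. The function names, the sample balances, and the choice of a Nakamoto-style coefficient are assumptions made for this example; they are not taken from the Valueverse methodology.

```python
from typing import Dict, Set


def active_voter_rate(voting_power: Dict[str, float], voters: Set[str]) -> float:
    """Fraction of token holders that cast a vote (hypothetical metric)."""
    if not voting_power:
        return 0.0
    active = sum(1 for address in voting_power if address in voters)
    return active / len(voting_power)


def nakamoto_coefficient(voting_power: Dict[str, float], threshold: float = 0.5) -> int:
    """Smallest number of holders whose combined power exceeds the threshold share."""
    total = sum(voting_power.values())
    running = 0.0
    ranked = sorted(voting_power.values(), reverse=True)
    for count, power in enumerate(ranked, start=1):
        running += power
        if running > threshold * total:
            return count
    return len(voting_power)


# Made-up balances, for illustration only.
power = {"0xA": 400.0, "0xB": 300.0, "0xC": 200.0, "0xD": 100.0}
rate = active_voter_rate(power, voters={"0xA", "0xC"})
coefficient = nakamoto_coefficient(power)
print(f"active voter rate: {rate:.2f}, Nakamoto coefficient: {coefficient}")
```

A low coefficient suggests that a handful of addresses can decide a vote, which is the kind of signal that price data alone would not show.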

In the case of vote-escrow tokenomics, such as Curve or Convex, the metrics could be a share of emissions sent to any pool versus that pool's liquidity, the average lock duration of veTokens, and the aggregate value of bribes. These provide a more detailed look at how this token incentivizes long-term alignment and enables efficient capital allocation in the system.

Figure 3

Convex Finance: CRV Token Locking Patterns
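The vote-escrow metrics described above could be computed along the following lines. This is a minimal sketch: the `Pool` and `Lock` records, their field names, and the sample values are hypothetical, and the amount-weighted average is one of several reasonable ways to define "average lock duration".

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pool:
    name: str
    emission_share: float  # fraction of emissions directed to this pool (assumed input)
    liquidity_usd: float   # pool liquidity in USD (assumed input)


@dataclass
class Lock:
    amount: float          # tokens locked
    duration_days: float   # lock duration in days


def emission_to_liquidity_ratio(pool: Pool, pools: List[Pool]) -> float:
    """Pool's share of emissions divided by its share of total liquidity."""
    total_liquidity = sum(p.liquidity_usd for p in pools)
    if total_liquidity == 0 or pool.liquidity_usd == 0:
        return float("inf")
    return pool.emission_share / (pool.liquidity_usd / total_liquidity)


def average_lock_duration(locks: List[Lock]) -> float:
    """Amount-weighted average lock duration in days."""
    total = sum(lock.amount for lock in locks)
    if total == 0:
        return 0.0
    return sum(lock.amount * lock.duration_days for lock in locks) / total


# Made-up values, for illustration only.
pools = [Pool("A", 0.5, 1_000_000.0), Pool("B", 0.5, 3_000_000.0)]
locks = [Lock(100.0, 365.0), Lock(300.0, 1460.0)]
bribes_usd = [1000.0, 2500.0]

ratio_a = emission_to_liquidity_ratio(pools[0], pools)
avg_lock = average_lock_duration(locks)
total_bribes = sum(bribes_usd)
```

A ratio well above 1 means a pool receives more emissions than its liquidity share would suggest, which may point to either effective incentive targeting or inefficient capital allocation, depending on context.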

In summary, utility-oriented measurements help us better judge the actual value of any given token and its long-term sustainability. With them, we can see which tokens are riding waves of speculation and which are creating value for their respective ecosystems.

Tracking utility usage can also reveal risks, vulnerabilities, and potential attack vectors in token ecosystems. It can uncover issues ranging from ownership concentration in a few wallets, which may enable collusion, to poor usage of fundamental mechanisms built around the token, or incentive misalignments that would go unnoticed if we only observed the price.
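Ownership concentration, one of the risks named above, can be quantified in several ways. The sketch below shows two common ones, a top-k holder share and the Herfindahl-Hirschman index (HHI). Both are standard concentration measures that we use here as an assumption; the source does not prescribe a specific formula, and the balances are invented.

```python
from typing import List


def top_k_share(balances: List[float], k: int) -> float:
    """Share of total supply held by the k largest wallets."""
    total = sum(balances)
    if total == 0:
        return 0.0
    return sum(sorted(balances, reverse=True)[:k]) / total


def herfindahl_index(balances: List[float]) -> float:
    """Sum of squared ownership shares: 1/n for an even split, 1.0 for a single holder."""
    total = sum(balances)
    if total == 0:
        return 0.0
    return sum((balance / total) ** 2 for balance in balances)


# Made-up wallet balances, for illustration only.
balances = [500.0, 300.0, 100.0, 100.0]
top2 = top_k_share(balances, k=2)
hhi = herfindahl_index(balances)
print(f"top-2 share: {top2:.2f}, HHI: {hhi:.2f}")
```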

The Value Capturing Theory Approach

The Value Capturing Theory (VCT) introduces a novel framework for understanding and evaluating token ecosystems. The Token Engineering Academy awarded this work the TE Prize. While the academic article is upcoming, we published the details in our previous blog post. Here, we briefly present its fundamentals to provide a better understanding of the Token Health Metrics design proposed thereafter.

The theory contains two main concepts: Origins of Value (OoVs) and Value Capturing Mechanisms (VCMs).

Origins of Value (OoVs)

Origins of Value represent the fundamental economic sources from which a token with utility functions within a protocol or network derives its intrinsic value. We've identified eight key OoVs:

- Value Transfer [1]
- Future Cashflow [2]
- Governance [3]
- Access [4]
- Representation [5]
- Hedonic Value [6]
- Risk Exposure [7]
- Conditional Action [8]

Each OoV contributes uniquely to a token's overall value proposition.

Value-Capturing Mechanisms (VCMs)

Value Capturing Mechanisms, in turn, are sets of interrelated OoVs that describe how value manifests and is captured inside a token ecosystem. VCMs provide a holistic view of a token's role and function within its greater network. The set of existing VCMs and OoVs can be explored in the Token Periodic Table:

Figure 4

The Periodic Table Structuring VCMs Design Space

How to use it:

- Rows correspond to the number of OoVs a given VCM contains.
- Columns correspond to the most impactful OoV in a given VCM.

Let's look at an example with the Work Token classification. A token with this VCM is staked into the protocol or otherwise exposed to slashing, after which tasks must be completed. Completed tasks are rewarded, and these rewards can be considered the future cashflow earned on top of the executed work and the token stake. This VCM can be identified in tokens such as TAO (Bittensor), LPT (Livepeer), and some DePIN projects.

On the periodic table, it is situated on the 3rd row since it is composed of 3 different OoVs: Future Cashflow, Risk Exposure and Conditional Action. In this context, Future Cashflow [2] refers to the ongoing revenue or income generated from work contributions within the ecosystem. Risk Exposure [7] encapsulates the risks that participants take by contributing to or interacting with the protocol, such as financial, operational, or technical risks, which often come with staking the asset. Conditional Action [8] emphasizes that the cashflow generation requires contributing some continuous work for the network:

Figure 5

Work Token Model: Value Capture Mechanism Decomposition
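The row and column rules of the periodic table can be expressed as a small data structure. The sketch below is illustrative only: the class names are hypothetical, mapping a column to the OoV's index number is our assumption, and the source does not state which OoV is the most impactful for the Work Token, so `FUTURE_CASHFLOW` is a placeholder choice.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet


class OoV(Enum):
    VALUE_TRANSFER = 1
    FUTURE_CASHFLOW = 2
    GOVERNANCE = 3
    ACCESS = 4
    REPRESENTATION = 5
    HEDONIC_VALUE = 6
    RISK_EXPOSURE = 7
    CONDITIONAL_ACTION = 8


@dataclass(frozen=True)
class VCM:
    name: str
    oovs: FrozenSet[OoV]
    dominant: OoV  # most impactful OoV; decides the column

    def __post_init__(self) -> None:
        if self.dominant not in self.oovs:
            raise ValueError("dominant OoV must be one of the VCM's OoVs")

    @property
    def row(self) -> int:
        """Row = number of OoVs the VCM contains."""
        return len(self.oovs)

    @property
    def column(self) -> int:
        """Column = index of the dominant OoV (assumed mapping)."""
        return self.dominant.value


work_token = VCM(
    name="Work Token",
    oovs=frozenset({OoV.FUTURE_CASHFLOW, OoV.RISK_EXPOSURE, OoV.CONDITIONAL_ACTION}),
    dominant=OoV.FUTURE_CASHFLOW,  # assumption: not specified in the text
)
print(work_token.name, "row:", work_token.row, "column:", work_token.column)
```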

Formulating Health Metrics

Tier 1 and Tier 2 Metrics

We build two types of metrics: Tier 1 (primary) and Tier 2 (secondary). These metrics are handpicked to measure the health and performance of specific aspects of a token ecosystem. We classify each metric as either OoV-specific (most heavily influenced by a single OoV) or coordination-derived (affected by multiple OoVs).

These simple metrics feed more complex statistical measures such as averages, medians, and distance from the mean in standard deviations, as well as metrics like percent change and rate of change. Metrics are grouped by OoV and labelled accordingly. We also indicate each metric's preferred direction (i.e., whether higher or lower is better).
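A minimal sketch of how such derived measures might be computed from a single metric's time series, using only Python's standard `statistics` module. The `summarize` helper, its output keys, and the sample series are hypothetical; in particular, reading "rate of change" as the average change per period is our assumption.

```python
import statistics
from typing import Dict, List


def summarize(series: List[float]) -> Dict[str, float]:
    """Derive summary statistics from a metric's time series (oldest value first)."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    mean = statistics.mean(series)
    stdev = statistics.pstdev(series)  # population standard deviation
    latest, previous, first = series[-1], series[-2], series[0]
    return {
        "mean": mean,
        "median": statistics.median(series),
        "stdev": stdev,
        # How many standard deviations the latest reading sits from the mean.
        "z_score": (latest - mean) / stdev if stdev else 0.0,
        # Change of the latest reading relative to the previous one, in percent.
        "pct_change": (latest - previous) / previous * 100 if previous else float("nan"),
        # Average change per period across the whole series.
        "rate_of_change": (latest - first) / (len(series) - 1),
    }


# Made-up weekly readings of some metric, for illustration only.
stats = summarize([100.0, 110.0, 120.0, 150.0])
for key, value in stats.items():
    print(f"{key}: {value:.2f}")
```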

OoV and VCM Health Metric Scores Calculation

To calculate OoV Health Metric Scores, we normalize the metrics where needed, calculate their percentile scores within each cohort type, and adjust their direction so that higher readings are always better. We then assign each metric a weight within its OoV category and, per Equation 1, calculate the weighted average of the percentile scores as follows:

Equation 1
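The steps described for the OoV score (percentile scoring within a cohort, direction adjustment, weighted average) can be sketched as follows. This is one reading of the text, not the authors' exact formula: the mid-rank percentile definition, the 0 to 100 scale, the metric names, and the weights are all assumptions. In words, the score is the sum of weight times percentile over all metrics, divided by the sum of the weights.

```python
from typing import Dict, List


def percentile_score(value: float, cohort: List[float], higher_is_better: bool = True) -> float:
    """Mid-rank percentile of `value` within `cohort`, on a 0-100 scale.

    The result is flipped for metrics where lower readings are better,
    so that a higher score always means a healthier reading.
    """
    below = sum(1 for v in cohort if v < value)
    equal = sum(1 for v in cohort if v == value)
    pct = 100.0 * (below + 0.5 * equal) / len(cohort)
    return pct if higher_is_better else 100.0 - pct


def oov_score(percentiles: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of percentile scores for the metrics under one OoV."""
    total_weight = sum(weights[metric] for metric in percentiles)
    weighted_sum = sum(weights[metric] * pct for metric, pct in percentiles.items())
    return weighted_sum / total_weight


# Made-up cohort readings and weights, for illustration only.
cohort = [10.0, 20.0, 30.0, 40.0]
percentiles = {
    "active_voter_rate": percentile_score(30.0, cohort),  # higher is better
    "holder_concentration": percentile_score(10.0, cohort, higher_is_better=False),
}
weights = {"active_voter_rate": 2.0, "holder_concentration": 1.0}
governance_score = oov_score(percentiles, weights)
print(f"governance OoV score: {governance_score:.2f}")
```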

Finally, to obtain the Total VCM Health Metric Score, we give each OoV a weight depending on how much it matters in assessing the health of that particular VCM. Depending on the VCM in question, OoVs that act independently are combined as a weighted arithmetic mean, while OoVs that show signs of interdependence have their weighted scores multiplied together:

Equation 2
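The two aggregation modes can be contrasted in a short sketch. The independent case follows the text directly as a weighted arithmetic mean. For the interdependent case, the text only says that weighted scores are multiplied, so the weighted geometric mean used here is one possible interpretation and should be treated as an assumption, as should the scores and equal weights in the example.

```python
import math
from typing import Dict


def vcm_score_independent(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted arithmetic mean of OoV scores (OoVs assumed independent)."""
    total = sum(weights[oov] for oov in scores)
    return sum(weights[oov] * score for oov, score in scores.items()) / total


def vcm_score_interdependent(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted geometric mean of OoV scores (assumed reading of 'multiplied').

    Because the scores are multiplied, one weak OoV drags the whole VCM
    score down, and a score of zero yields zero overall.
    """
    total = sum(weights[oov] for oov in scores)
    return math.prod(score ** (weights[oov] / total) for oov, score in scores.items())


# Made-up Work Token OoV scores on a 0-100 scale, with equal weights.
scores = {"future_cashflow": 80.0, "risk_exposure": 50.0, "conditional_action": 20.0}
weights = {"future_cashflow": 1.0, "risk_exposure": 1.0, "conditional_action": 1.0}

arithmetic = vcm_score_independent(scores, weights)
geometric = vcm_score_interdependent(scores, weights)
print(f"independent: {arithmetic:.2f}, interdependent: {geometric:.2f}")
```

The multiplicative form penalizes imbalance: with the same inputs it never exceeds the arithmetic mean, which fits the intuition that interdependent OoVs cannot compensate for one another.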

This methodology is intended to produce valuation frameworks that can be tailored to, and remain useful in, most token environments. It could serve as a strong analytical framework for assessing token ecosystems and offer insight into what brings value to future token designs.

Leveraging Open Source Observer

Open Source Observer (OSO) is a real-time blockchain analytics system that tracks the ongoing impact of open-source software contributions on the long-term health of their ecosystem. Kariba Labs developed the platform as a public good, and it includes access to blockchain and open data through its BigQuery data warehouse.

This makes OSO a strong testing ground for these metrics, given its focus on impact measurement and its data availability: most Optimism-native protocols are already supported on the platform.

OSO's Data Pipeline and Capabilities

Figure 6

Open Source Observer: BigQuery Metrics Dashboard

OSO's data pipeline is powered by Google BigQuery, with a schema optimized for storing and querying large volumes of blockchain and open-source data at scale. The pipeline follows a typical dbt-based approach in which data passes through staging, intermediate, and mart models. This makes the data easier to use at each stage of processing, from raw events to refined metrics.
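To make the staging, intermediate, and mart layering concrete, here is a toy version of the same idea over in-memory records. In a real dbt project these layers would be SQL models running in the warehouse; the Python functions, the event fields, and the "average daily volume" metric below are hypothetical and do not reflect OSO's actual schema.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

RawEvent = Dict[str, str]


def staging(raw_events: List[RawEvent]) -> List[dict]:
    """Staging: standardize names and types, dropping malformed rows."""
    staged = []
    for event in raw_events:
        try:
            staged.append({
                "day": event["timestamp"][:10],
                "token": event["token"].upper(),
                "amount": float(event["amount"]),
            })
        except (KeyError, ValueError):
            continue
    return staged


def intermediate(staged: List[dict]) -> Dict[Tuple[str, str], float]:
    """Intermediate: aggregate transfers into daily volume per token."""
    daily: Dict[Tuple[str, str], float] = defaultdict(float)
    for row in staged:
        daily[(row["token"], row["day"])] += row["amount"]
    return dict(daily)


def mart(daily: Dict[Tuple[str, str], float]) -> Dict[str, float]:
    """Mart: a refined, analysis-ready metric (average daily volume per token)."""
    per_token: Dict[str, List[float]] = defaultdict(list)
    for (token, _day), volume in daily.items():
        per_token[token].append(volume)
    return {token: sum(v) / len(v) for token, v in per_token.items()}


# Made-up raw events, for illustration only; the last row is malformed on purpose.
raw = [
    {"timestamp": "2024-10-01T12:00:00Z", "token": "op", "amount": "10"},
    {"timestamp": "2024-10-01T18:00:00Z", "token": "OP", "amount": "30"},
    {"timestamp": "2024-10-02T09:00:00Z", "token": "op", "amount": "20"},
    {"timestamp": "2024-10-02T10:00:00Z", "token": "op", "amount": "n/a"},
]
metrics = mart(intermediate(staging(raw)))
print(metrics)
```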

Supplementing with Alternative Data Sources

While OSO has a complete data set for many blockchain ecosystems, there are cases where specific data is not available or not fully decoded within the OSO framework. In those cases, we will supplement our analysis with alternative data sources, including Dune Analytics. This flexibility helps us maintain the range and depth of the health metrics across past, present, and future data.

The Future of Token Ecosystem Evaluation

With the understanding of token ecosystems developed by OoVs and VCMs, which we explore in this article, a new way to evaluate, design, and grow blockchain projects is on the horizon.

Impact on Project Development and Funding

New health metrics will allow users and investors to allocate their resources in a more informed and transparent way. This transition to impact financing informed by health metrics might offer:

- Enhanced capacity to spot high-value, ecosystem-growing projects.
- Increased monitoring of delivered grants and project performance.
- Better evaluation of each project's overall contribution to the health of the ecosystem as a whole.

Projects can use this to their advantage by adopting a data-driven methodology that allows them to:

- Ground token-level economics and reward systems in data on actual usage.
- Continually iterate on and improve token models with feedback from the health metrics.
- Better align the goals of the project with ecosystem needs and funder expectations.
- Identify and mitigate potential weak points in token designs.

This approach could mark a significant change in how retroactive public goods funding in the Optimism ecosystem is carried out. These metrics improve project evaluation in the funding process and equip token holders with rich data for governance decisions on protocol upgrades, resource allocation, and more. Projects built on the OP Stack and joining the Superchain will be able to understand and measure their impact on Optimism, contributing to a more transparent and efficient ecosystem. Over time, this could translate into industry-standard best practices for setting up token ecosystems and calibrating impact measurement, which could make the blockchain sector more stable as those methods are adopted.

Ecosystem Governance Implications

We anticipate that some of these health metrics will go mainstream and be adopted into how foundations and DAOs manage their treasuries and decide how to allocate resources. Over time, the shift of capital allocation in the blockchain ecosystem will favour such holistic health metrics, ensuring resources are channelled to projects that can show they create actual value and positively impact the ecosystem.

Disclaimer: The content of this document is provided for informational, educational, or discussion purposes only and does not purport to be complete or definitive. It should not be considered as financial, investment or legal advice. While every effort has been made to ensure accuracy and completeness, the author does not guarantee that it is free from errors or omissions and may update, revise, or retract information without prior notice. Readers are advised to consult the most recent version for the latest details and to be aware of the inherent risks of cryptocurrency and blockchain technologies. The author does not make guarantees or representations regarding any discussed tokens or projects and shall not be liable for any direct, indirect, or consequential damages arising from the use or reliance on this content. Mention of any project, token, or protocol does not constitute an endorsement. By accessing this document, readers waive all claims against the author.

Bibliography

- Dune. (n.d.). Retrieved October 21, 2024, from https://dune.com/
- Nansen. (n.d.). Retrieved October 21, 2024, from https://www.nansen.ai/
- Open Source Observer. (n.d.). Retrieved October 21, 2024, from https://www.opensource.observer/
- Open Source Observer: Documentation. (n.d.). Open Source Observer Docs. Retrieved October 21, 2024, from https://docs.opensource.observer/docs/
- Santiment. (n.d.). Retrieved October 21, 2024, from https://santiment.net/
- Sumanov, V. (n.d.). TE Value Capturing Fundamentals: Session 2. Google Docs. Retrieved October 21, 2024, from https://drive.google.com/file/d/1CEN0Tqtjch8nlMkDI1za5_RZV4sBDgdd/view?usp=sharing&usp=embed_facebook
- Sumanov, V., & Desmarais, J.-L. (n.d.). Token Classification Framework by Consideration of Origins of Value and Mechanisms of Manifestation Thereof. Google Docs. Retrieved October 21, 2024, from https://docs.google.com/document/d/1I1T_ftDnt7E_y5C9mlqTomxd6WhYUh1r/edit?usp=sharing&ouid=116362223184273470428&rtpof=true&sd=true

About Valueverse Research

Valueverse is a research lab focused on economic research in web3, particularly on mechanism design and the economic foundations of token utility.

The lab develops and maintains a classification framework for token analysis and design that considers the origins of value and the value-capturing mechanisms that manifest them.

More updates on the Valueverse X account.
