Our Hardware-Software Co-Optimization Solution: To address model verification and data encryption jointly, we design an integrated approach that targets leading security and privacy (SP) performance in our system. Specifically, we combine the robust, hardware-centric protections of Trusted Execution Environments (TEEs) with advanced algorithmic approaches, including Consensus-based Distribution Verification (CDV) and Split-Learning (SL), so that security and privacy are foundational pillars of the system.

I. Hardware Side: Trusted Execution Environments (TEEs)

- Secure Node Operations: By establishing secure isolation zones within the nodes, TEEs protect sensitive processes and data from unauthorized access. This is essential in decentralized AI environments to prevent data breaches and manipulation.

- Diverse TEE Implementations: Our strategy of supporting a variety of TEE technologies provides adaptability and resilience, ensuring that even nodes with limited resources can contribute to the network.

- End-to-End Encryption: TEEs enable data to stay encrypted throughout the network, maintaining data privacy from input to output.

- Trust and Verification through Attestation: Nesa's network uses attestation protocols to establish decentralized trust. Nodes must validate their TEEs against predefined security standards, ensuring compliance and secure integration.

- Secret Sharing Technique: Keys are split into shares with secret sharing, which prevents any single node from accessing the entire key.

- Balance of Security and Inclusivity: While TEEs offer robust security, they are not always available. Therefore, the system allows nodes without TEEs to function as validators, with additional encryption measures applied.
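The secret sharing idea in the list above can be illustrated with a minimal sketch. It assumes the simplest possible scheme, an n-of-n XOR split in which every share is required to rebuild the key; the source does not say which scheme Nesa uses (a threshold scheme such as Shamir's is equally plausible), and the function names `split_key` and `combine_shares` are hypothetical.

```python
import secrets
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def split_key(key: bytes, n_shares: int) -> list[bytes]:
    """Split `key` into n_shares shares; all of them are needed to rebuild it.

    Assumption: an n-of-n XOR scheme, chosen only for illustration.
    """
    if n_shares < 2:
        raise ValueError("need at least two shares")
    # n-1 shares are uniformly random; the last one is chosen so that
    # XOR-ing every share together yields the original key.
    shares = [secrets.token_bytes(len(key)) for _ in range(n_shares - 1)]
    last = reduce(xor_bytes, shares, key)
    return shares + [last]


def combine_shares(shares: list[bytes]) -> bytes:
    """Rebuild the key by XOR-ing all shares together."""
    return reduce(xor_bytes, shares)


key = secrets.token_bytes(32)          # e.g. a 256-bit symmetric key
shares = split_key(key, n_shares=4)    # one share per (hypothetical) node
recovered = combine_shares(shares)     # only possible with all four shares
```

In this scheme any subset of fewer than all shares is statistically independent of the key, so a single node holding one share learns nothing about it.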

II. Software/Algorithm Side: Model Verification

- Consensus-based Distribution Verification (CDV): CDV measures the consensus of output distributions across inference nodes to confirm correct model execution and detect potential deviations.

- Split-Learning (SL): SL protects user data by transferring intermediate embeddings between nodes instead of raw data, maintaining privacy.

- Model Integrity Verification: Ensuring that each node accurately executes the intended model safeguards the system's integrity and reliability, maintains consistency, and guards against malicious modifications.

- Optimization of Computational Resources: These verification mechanisms help prevent wasted resources and keep operational costs under control.

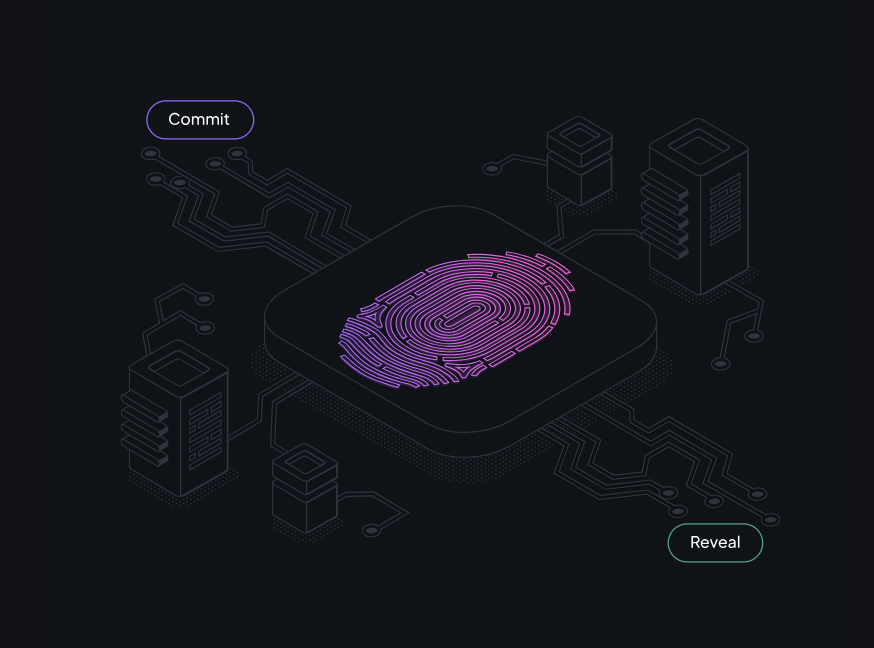
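As a rough illustration of how consensus over output distributions could flag a deviating node, the sketch below compares each node's reported distribution against an element-wise median. The distance metric (total variation), the median reference, the threshold of 0.1, and all names are assumptions made for this example; the source does not specify how CDV computes consensus.

```python
from statistics import median


def total_variation(p: list[float], q: list[float]) -> float:
    """Total variation distance between two discrete distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def find_deviating_nodes(
    outputs: dict[str, list[float]], threshold: float = 0.1
) -> list[str]:
    """Return nodes whose output distribution is far from the consensus.

    Assumption: the consensus reference is the element-wise median of all
    reported distributions (not renormalized, which is fine for a rough check).
    """
    consensus = [median(column) for column in zip(*outputs.values())]
    return sorted(
        node
        for node, dist in outputs.items()
        if total_variation(dist, consensus) > threshold
    )


# Hypothetical class probabilities reported by four inference nodes.
outputs = {
    "node_a": [0.70, 0.20, 0.10],
    "node_b": [0.69, 0.21, 0.10],
    "node_c": [0.71, 0.19, 0.10],
    "node_d": [0.10, 0.10, 0.80],  # disagrees with the others
}
deviating = find_deviating_nodes(outputs)  # ["node_d"]
```

A median reference tolerates a minority of faulty nodes; a production system would need to choose the metric and threshold to match the numerical noise expected between honest nodes.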

III. Hybrid Enhanced ZK Privacy

- Commit-Reveal Paradigm: The two-phase transaction structure involves an initial commitment phase where nodes commit to their results without revealing the full details. This is followed by a reveal phase, where the actual computations and results are disclosed.

- Incentivizing Honest Computations: By implementing the commit-reveal mechanism, nodes are incentivized to perform their computations honestly. This is because they must commit to their results before revealing them, ensuring they cannot alter their output after seeing the results of other nodes.

- Safeguarding Against Dishonest Behavior: This approach helps prevent dishonest behavior such as free-riding, where a node might attempt to benefit from the efforts of other nodes without contributing fairly.

- Zero-Knowledge Proofs: The system can leverage Zero-Knowledge proofs to further enhance privacy. In this way, nodes can prove that their computations are valid and follow the network's rules without revealing sensitive information.

- Trust in Inference Results: By enforcing these mechanisms, users can trust the integrity of the inference results provided by the network. This trust is critical in decentralized settings where multiple nodes participate in computations.

- Enhancing Privacy: The hybrid enhanced ZK privacy system provides users with strong privacy guarantees, as sensitive information is not unnecessarily shared during computations.

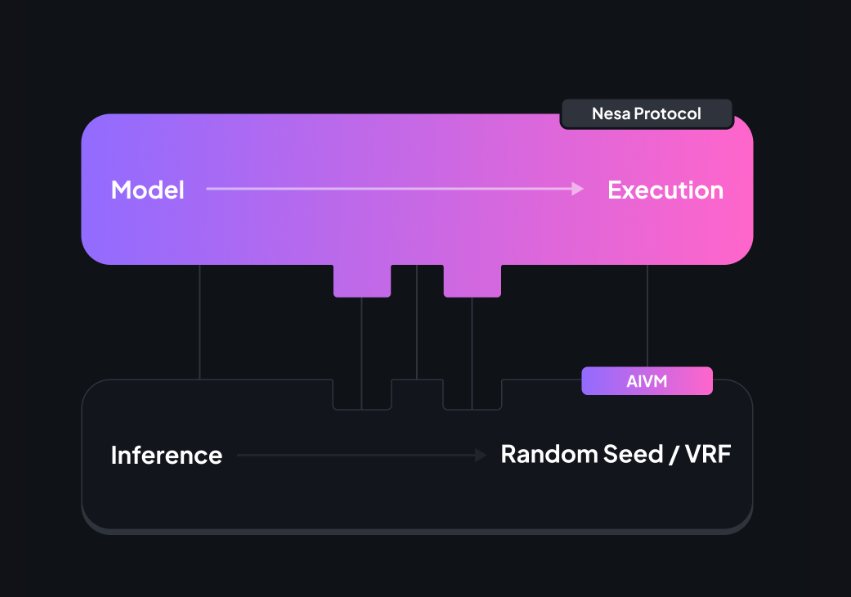
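The commit-reveal flow described above can be sketched with a salted hash commitment. This is a minimal example under stated assumptions: SHA-256 over a random 32-byte nonce plus the result is one common construction, not necessarily the one the network uses, and the function names `commit` and `verify_reveal` are hypothetical.

```python
import hashlib
import hmac
import secrets


def commit(result: bytes) -> tuple[str, bytes]:
    """Commit phase: publish the digest, keep the nonce and result private."""
    # The fixed-length random nonce hides the result even when the set of
    # possible results is small enough to brute-force.
    nonce = secrets.token_bytes(32)
    digest = hashlib.sha256(nonce + result).hexdigest()
    return digest, nonce


def verify_reveal(commitment: str, result: bytes, nonce: bytes) -> bool:
    """Reveal phase: anyone can check the revealed result against the digest."""
    expected = hashlib.sha256(nonce + result).hexdigest()
    return hmac.compare_digest(expected, commitment)


# A node commits to its inference result before seeing anyone else's.
result = b"label=cat;score=0.93"
commitment, nonce = commit(result)

# Later, the node reveals (result, nonce); a changed result fails the check.
honest_ok = verify_reveal(commitment, result, nonce)
tampered_ok = verify_reveal(commitment, b"label=dog;score=0.93", nonce)
```

Because the digest is published first, a node that copies or adjusts its answer after seeing other reveals cannot produce a matching pair of result and nonce.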

IV. VRF & Pseudo-random Seed

- Fixing the Random Seed: By setting a specific random seed for all AI model inferences, the network ensures that any pseudo-random number generation follows the same sequence in every execution. This consistency helps maintain deterministic and reproducible results, allowing users and developers to rely on the network's outputs with confidence.

- Integration of Verifiable Random Functions (VRFs): In scenarios where public randomness is necessary, the network uses VRFs. These functions generate randomness that is both unpredictable and provably unbiased. VRFs offer the following advantages:

  - Unpredictability: The output of a VRF is unpredictable to anyone who does not hold the secret key; knowing the input and the function alone is not enough to predict the output.

  - Provable Unbiasedness: VRFs provide a proof alongside each output, ensuring that the generated randomness is not biased or influenced by malicious parties.

  - Verifiability: The proof can be verified by other nodes or parties, ensuring trustworthiness in the generated random value.

- Consistency and Trustworthiness: By using fixed random seeds and VRFs, the network enhances the consistency and trustworthiness of the inference process. This is particularly important in decentralized AI environments where multiple nodes work together and rely on each other's outputs.

- Resilience to Manipulation: The use of fixed random seeds and VRFs makes it difficult for any node to manipulate the results of the inference process. This adds an extra layer of security and reliability to the network's operations.

- Optimized Resource Usage: By achieving reproducible results, the network can avoid unnecessary recomputation and resource wastage. This efficiency helps maintain the overall health and sustainability of the network.

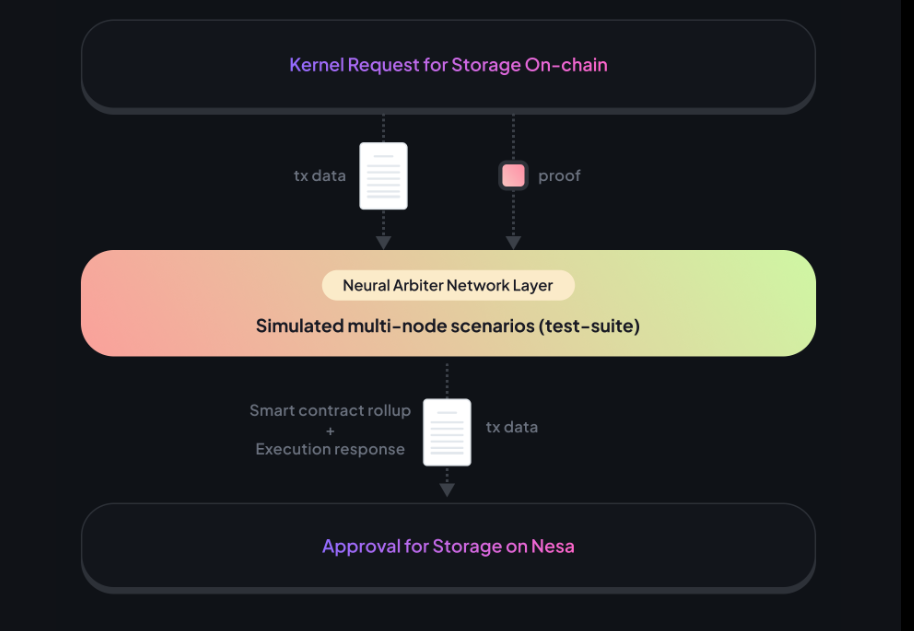
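The effect of fixing the random seed can be shown with a small sampling sketch. It uses Python's standard `random` module as a stand-in for a model's sampler; the function name `sample_tokens`, the probabilities, and the seed value are hypothetical, and real inference stacks may need further settings (for example deterministic GPU kernels) that are not covered here.

```python
import random


def sample_tokens(probs: list[float], n_tokens: int, seed: int) -> list[int]:
    """Draw token ids from `probs` using a generator seeded with `seed`."""
    # A private generator keeps the result independent of any other code
    # that touches the global random state.
    rng = random.Random(seed)
    vocab = list(range(len(probs)))
    return rng.choices(vocab, weights=probs, k=n_tokens)


probs = [0.5, 0.3, 0.15, 0.05]  # hypothetical next-token distribution

# Two nodes using the same agreed seed draw the same sequence.
run_node_a = sample_tokens(probs, n_tokens=8, seed=1234)
run_node_b = sample_tokens(probs, n_tokens=8, seed=1234)
same_output = run_node_a == run_node_b
```

With identical seeds the two runs agree element by element, which is what allows other nodes to compare or re-check an output instead of treating sampling noise as a possible fault.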

V. Kernel Validation Testing

The AIVM network ensures the reliability and robustness of its kernels through a rigorous validation process before storing them on the blockchain. This process involves a series of steps to verify the kernel's compliance with the network's standards and its consistency across different environments:

- Compliance with Config Template: The kernel must adhere to a predefined configuration template that specifies parameters such as input and output formats, expected behaviors, and operational requirements. This ensures uniformity and compatibility across the network.

- Deterministic Execution Verification: The network performs a thorough examination of the kernel's execution to ensure it behaves deterministically. This means that, given the same inputs, the kernel consistently produces the same outputs regardless of where it is executed.

- Simulated Multi-Node Testing: The kernel is subjected to simulated multi-node scenarios designed to replicate the conditions of a real-world distributed environment. This includes testing the kernel's performance and behavior when executed across multiple nodes with varying system configurations.

- Neural Arbiter Network (NAN): A specialized Neural Arbiter Network oversees the validation process. NAN serves as an impartial arbiter, running a suite of tests to confirm the kernel's determinism and immunity to environmental variances.

- Consistency Across Diverse Environments: The tests focus on assessing the kernel's ability to produce consistent results across different hardware, software, and network conditions. This is critical for maintaining the integrity and trustworthiness of the network's outputs.

- Validation of Results: NAN evaluates the kernel's outputs against expected results to ensure it operates correctly and reliably. Any discrepancies or deviations from the expected outputs are flagged for further investigation.

- Verification Process: Once the kernel passes all the tests, including those conducted by NAN, it is considered verified and ready for deployment. At this point the kernel is signed.

- Blockchain Storage: After successful validation, the signed kernel is stored on the blockchain for use in the network. This adds a layer of transparency and immutability to the process, as the blockchain serves as a permanent record of the kernel's validation.
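Two of the checks above, config template compliance and deterministic execution, can be sketched as follows. This is an illustration only: the required keys, the JSON-based output digest, and the function names are assumptions, and repeated runs on one machine only approximate the multi-node testing the network describes.

```python
import hashlib
import json
import random
from typing import Callable

# Hypothetical template: the source does not list the actual required fields.
REQUIRED_KEYS = {"name", "input_format", "output_format"}


def missing_config_keys(config: dict) -> set[str]:
    """Return template keys that the kernel's config does not define."""
    return REQUIRED_KEYS - set(config)


def output_digest(output) -> str:
    """Hash a JSON-serializable output in a canonical form."""
    canonical = json.dumps(output, sort_keys=True).encode()
    return hashlib.sha256(canonical).hexdigest()


def is_deterministic(kernel: Callable, inputs: list, runs: int = 5) -> bool:
    """Run the kernel several times and require identical output digests."""
    digests = {
        output_digest([kernel(x) for x in inputs]) for _ in range(runs)
    }
    return len(digests) == 1


def scale_kernel(x: list[float]) -> list[float]:
    """Toy deterministic kernel: doubles every value."""
    return [2 * v for v in x]


def noisy_kernel(x: list[float]) -> list[float]:
    """Toy non-deterministic kernel: adds unseeded random noise."""
    return [v + random.random() for v in x]


config = {"name": "scale", "input_format": "list[float]"}
missing = missing_config_keys(config)             # {"output_format"}
inputs = [[1.0, 2.0], [3.5]]
scale_ok = is_deterministic(scale_kernel, inputs)  # True
noisy_ok = is_deterministic(noisy_kernel, inputs)  # False
```

Comparing digests rather than raw outputs mirrors how a result could later be recorded compactly, for instance alongside the kernel's signature.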

Overall, the combination of hardware and software approaches offers a robust, secure, and inclusive AI environment that maintains privacy, integrity, and efficiency in a decentralized network. By blending advanced algorithmic techniques with secure hardware-centric protections, this approach provides a solid foundation for Nesa's system.
